When looking up information about stars or any other celestial bodies, we’re usually given some numbers expressing their magnitude, which can be apparent or absolute. For example, the Sun has an apparent magnitude of about -27, the full Moon about -13, and Venus about -5. But what’s the deal with these numbers anyway? How do we calculate them?

First of all, in astronomy, magnitude is a logarithmic measure of the brightness of an object at a certain wavelength (optical, infrared, etc.).

History

About 2000 years ago, the Greek astronomer Hipparchus invented a magnitude system that classified the stars by their apparent size. With the naked eye, a star such as Sirius seems larger than Mizar, which in turn appears larger than a faint star such as Alcor. According to this system, the brightest stars are “1st-class” stars, while the faintest are “6th-class” stars; the brighter the star is, the lower its magnitude is.

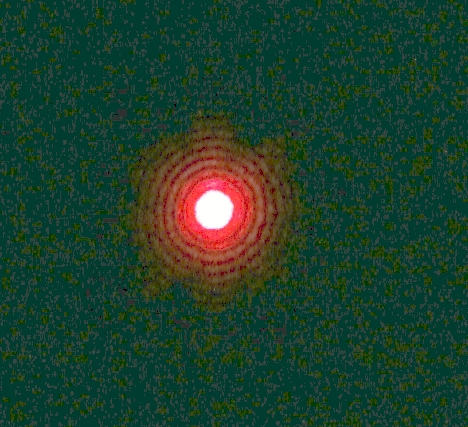

This classification remained in use well into the 19th century. Even though scientists had telescopes, stars were seen as bright central spots (Airy disks) surrounded by faint concentric rings. So the physical size of the body continued to be misinterpreted because of this spurious diffraction pattern.

Airy disk of Betelgeuse. © spiff.rit.edu

In the 19th century, stellar parallax was used to determine distances to the stars. It was then that astronomers understood that stars appear as point sources because they are very far away. Once the diffraction of light and astronomical seeing were explained, it became clear that the apparent sizes of stars depend on the intensity of the light coming from them (i.e. their apparent brightness). Photometry then showed that 1st-class stars are about 100 times brighter than 6th-class stars. In 1856, Norman R. Pogson of Oxford proposed a standard ratio of $\sqrt[5]{100} \approx 2.512$ between consecutive magnitudes, so that a difference of five magnitudes corresponds to a factor of 100 in brightness. This new system was adopted and it is the one used nowadays. What is measured is the brightness of the stars, not their apparent size. Note that on this logarithmic scale a star may be brighter than a 1st-class star. For instance, Arcturus is about magnitude 0 and Sirius is magnitude -1.46.
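The arithmetic behind Pogson's ratio can be checked with a few lines of Python. This is only a minimal sketch: the names `POGSON_RATIO` and `brightness_ratio` are made up for illustration, and Arcturus is taken as magnitude 0, as in the text.

```python
# Pogson's ratio: five magnitudes correspond to a factor of 100 in brightness,
# so one magnitude corresponds to the fifth root of 100.
POGSON_RATIO = 100 ** (1 / 5)


def brightness_ratio(delta_mag):
    """Brightness factor that corresponds to a magnitude difference."""
    return POGSON_RATIO ** delta_mag


print(round(POGSON_RATIO, 3))      # 2.512
print(round(brightness_ratio(5)))  # 100

# How much brighter Sirius (-1.46) appears than Arcturus (taken as 0).
print(brightness_ratio(0 - (-1.46)))
```

The last line gives a factor of a little under 4, which shows how a difference of less than two magnitudes already means a clearly visible difference in brightness.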

Apparent Magnitude

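Two stars, one with apparent magnitude $m_1$ and flux $F_1$ and another with apparent magnitude $m_2$ and flux $F_2$, are related by the following formula (flux is the luminosity per unit area at a distance d, $F = L / (4\pi d^2)$, measured in J m$^{-2}$ s$^{-1}$):

$$m_1 - m_2 = -2.5 \log_{10}\left(\frac{F_1}{F_2}\right)$$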

Using this relation, the magnitude scale goes beyond the six-class system known since Antiquity. It does more than classify stars: it also measures their brightness. Furthermore, magnitudes can be calculated for any celestial body (such as planets and moons), not just the stars.
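As a rough illustration, the relation between magnitudes and fluxes can be sketched in Python, assuming the standard Pogson form $m_1 - m_2 = -2.5 \log_{10}(F_1/F_2)$ and an object that radiates equally in all directions. The function names are hypothetical and chosen only for this example.

```python
import math


def flux(luminosity_w, distance_m):
    """Flux in J m^-2 s^-1 at a given distance, assuming isotropic emission."""
    return luminosity_w / (4 * math.pi * distance_m ** 2)


def magnitude_difference(flux1, flux2):
    """m1 - m2 for two objects with fluxes flux1 and flux2."""
    return -2.5 * math.log10(flux1 / flux2)


def flux_ratio(m1, m2):
    """F1 / F2 for two objects with apparent magnitudes m1 and m2."""
    return 10 ** (-0.4 * (m1 - m2))


# A 1st-class star compared with a 6th-class star: a factor of 100 in flux.
print(flux_ratio(1, 6))

# The Sun (-27) compared with the full Moon (-13), using the rounded
# values quoted at the start of the article.
print(flux_ratio(-27, -13))
```

With the rounded magnitudes from the introduction, the Sun comes out several hundred thousand times brighter than the full Moon.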

Living in an era dominated by technology has its advantages, and modern telescopes are among them. Observing across many wavelengths allows us to measure even very faint objects. The Hubble Space Telescope can detect objects of about the 30th magnitude.

Apparent and absolute magnitude

The apparent magnitude is the apparent brightness of an object as seen from Earth, so it depends on distance. For a given luminosity, objects closer to Earth appear brighter and therefore have lower apparent magnitudes than more distant ones. The absolute magnitude measures the luminosity of an object and is independent of distance, as it is defined as the apparent magnitude the object would have at a standard distance (10 pc for stars). For example, the star Betelgeuse is more luminous than the star Sirius. However, Sirius is much closer to us than Betelgeuse, so its apparent magnitude has a lower value.

The absolute magnitude can be calculated from the apparent magnitude using the distance modulus:

$$m - M = 5 \log_{10}(d) - 5$$

where m is the apparent magnitude, M is the absolute magnitude, d is the distance to the star measured in parsecs.
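The distance modulus is easy to try out in code. The sketch below assumes the standard form $m - M = 5 \log_{10}(d) - 5$ with d in parsecs. The function names are hypothetical, and the distance to Sirius (about 2.64 pc) is an approximate value that does not come from the text above.

```python
import math


def absolute_magnitude(m, d_pc):
    """Absolute magnitude M from apparent magnitude m and distance in parsecs."""
    return m - 5 * math.log10(d_pc) + 5


def apparent_magnitude(M, d_pc):
    """Apparent magnitude m from absolute magnitude M and distance in parsecs."""
    return M + 5 * math.log10(d_pc) - 5


# At the standard distance of 10 pc, the two magnitudes coincide.
print(absolute_magnitude(-1.46, 10))

# Sirius: m = -1.46 (from the text), d of about 2.64 pc (assumed value).
print(absolute_magnitude(-1.46, 2.64))
```

Because Sirius is closer than 10 pc, moving it out to the standard distance makes it fainter, so its absolute magnitude comes out as a larger number than its apparent magnitude.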